A few weeks ago, I was talking to another Java programmer who was once a C++ programmer. The conversation gravitated towards data structures, and how C++ programmers need to consider the best data structure for their particular application and then figure out the best way to build it, while Java programmers typically just choose the most suitable data structure from the standard java.util library. In my opinion, this is both a good thing and a bad thing for Java programmers. It's good because you don't have to worry about implementing a Vector for each project (or remember which third-party library to include or purchase), and it's bad because it makes you lazy. So lazy, in fact, that when the time comes to implement a data structure that is not available in the Java standard library, or cannot be synthesized by combining Maps and Lists, Java programmers are often at a loss for how to proceed.

This conversation turned out to be prescient, since a few days later I found myself programming a business object that just had to be mapped to a Tree data structure. It was a Task object, which can contain other Tasks, which can contain other Tasks, and so on. Since there is no built-in Tree data structure in the java.util package, I had to create my own. This article explains the design and provides the code for a generic Tree structure.

The basic Tree Data Structure

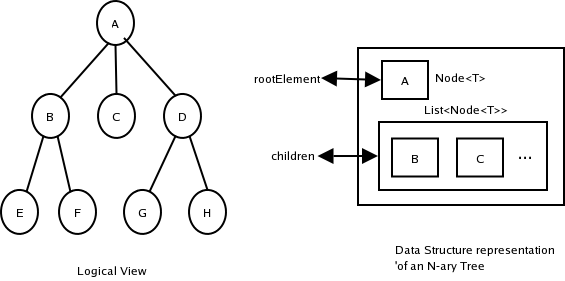

First off, the Tree class itself should be a container class, and should contain elements of type Node. The Node element is a thin wrapper over the actual data content, to which we will assign the generic type T. So our Tree<T> will contain a collection of Node<T> objects, where T is the actual data that we want to represent in our tree structure. Each Node<T> holds an element of type T and a List<Node<T>> of its children. We use a List to hold the children because we are dealing with an N-ary Tree, i.e., we cannot tell in advance how many children a particular Node will have. The diagram above shows the logical tree and the corresponding data structure.

The code for the Tree and Node objects follows:

import java.util.ArrayList;

import java.util.List;

/**

* Represents a Tree of Objects of generic type T. The Tree is represented as

* a single rootElement which points to a List<Node<T>> of children. There is

* no restriction on the number of children that a particular node may have.

* This Tree provides a method to serialize the Tree into a List by doing a

* pre-order traversal. It has several methods to allow easy updating of Nodes

* in the Tree.

*/

public class Tree<T> {

private Node<T> rootElement;

/**

* Default ctor.

*/

public Tree() {

super();

}

/**

* Return the root Node of the tree.

* @return the root element.

*/

public Node<T> getRootElement() {

return this.rootElement;

}

/**

* Set the root Element for the tree.

* @param rootElement the root element to set.

*/

public void setRootElement(Node<T> rootElement) {

this.rootElement = rootElement;

}

/**

* Returns the Tree<T> as a List of Node<T> objects. The elements of the

* List are generated from a pre-order traversal of the tree.

* @return a List<Node<T>>.

*/

public List<Node<T>> toList() {

List<Node<T>> list = new ArrayList<Node<T>>();

if (rootElement != null) { // an empty tree yields an empty list

walk(rootElement, list);

}

return list;

}

/**

* Returns a String representation of the Tree. The elements are generated

* from a pre-order traversal of the Tree.

* @return the String representation of the Tree.

*/

public String toString() {

return toList().toString();

}

/**

* Walks the Tree in pre-order style. This is a recursive method, and is

* called from the toList() method with the root element as the first

* argument. It appends to the second argument, which is passed by reference

* as it recurses down the tree.

* @param element the starting element.

* @param list the output of the walk.

*/

private void walk(Node<T> element, List<Node<T>> list) {

list.add(element);

for (Node<T> data : element.getChildren()) {

walk(data, list);

}

}

}

/**

* Represents a node of the Tree<T> class. The Node<T> is also a container, and

* can be thought of as instrumentation to determine the location of the type T

* in the Tree<T>.

*/

public class Node<T> {

public T data;

public List<Node<T>> children;

/**

* Default ctor.

*/

public Node() {

super();

}

/**

* Convenience ctor to create a Node<T> with an instance of T.

* @param data an instance of T.

*/

public Node(T data) {

this();

setData(data);

}

/**

* Return the children of Node<T>. The Tree<T> is represented by a single

* root Node<T> whose children are represented by a List<Node<T>>. Each of

* these Node<T> elements in the List can have children. The getChildren()

* method will return the children of a Node<T>.

* @return the children of Node<T>

*/

public List<Node<T>> getChildren() {

if (this.children == null) {

this.children = new ArrayList<Node<T>>(); // create lazily so changes to the returned list are kept

}

return this.children;

}

/**

* Sets the children of a Node<T> object. See docs for getChildren() for

* more information.

* @param children the List<Node<T>> to set.

*/

public void setChildren(List<Node<T>> children) {

this.children = children;

}

/**

* Returns the number of immediate children of this Node<T>.

* @return the number of immediate children.

*/

public int getNumberOfChildren() {

if (children == null) {

return 0;

}

return children.size();

}

/**

* Adds a child to the list of children for this Node<T>. The addition of

* the first child will create a new List<Node<T>>.

* @param child a Node<T> object to set.

*/

public void addChild(Node<T> child) {

if (children == null) {

children = new ArrayList<Node<T>>();

}

children.add(child);

}

/**

* Inserts a Node<T> at the specified position in the child list. Will

* throw an IndexOutOfBoundsException if the index does not exist.

* @param index the position to insert at.

* @param child the Node<T> object to insert.

* @throws IndexOutOfBoundsException if the index is out of range.

*/

public void insertChildAt(int index, Node<T> child) throws IndexOutOfBoundsException {

if (index == getNumberOfChildren()) {

// this is really an append

addChild(child);

return;

} else {

// add() itself throws IndexOutOfBoundsException for a bad index,

// and getChildren() never returns null

getChildren().add(index, child);

}

}

/**

* Remove the Node<T> element at index index of the List<Node<T>>.

* @param index the index of the element to delete.

* @throws IndexOutOfBoundsException if the index is out of range.

*/

public void removeChildAt(int index) throws IndexOutOfBoundsException {

getChildren().remove(index); // also safe when no children have been added yet

}

public T getData() {

return this.data;

}

public void setData(T data) {

this.data = data;

}

public String toString() {

StringBuilder sb = new StringBuilder();

sb.append("{").append(getData().toString()).append(",[");

int i = 0;

for (Node<T> e : getChildren()) {

if (i > 0) {

sb.append(",");

}

sb.append(e.getData().toString());

i++;

}

sb.append("]").append("}");

return sb.toString();

}

}

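As a quick illustration of how these pieces fit together, here is a minimal, self-contained sketch that builds a small three-level tree and flattens it in pre-order. It deliberately uses a pared-down stand-in class (`MiniNode`, a hypothetical name, not the `Node<T>` class above) so that it compiles on its own; the traversal mirrors what `walk()` does.

```java
import java.util.ArrayList;
import java.util.List;

class PreOrderDemo {
    /** Builds a small task tree and returns its pre-order listing. */
    static List<String> demo() {
        MiniNode<String> root = new MiniNode<>("release");
        MiniNode<String> build = root.addChild("build");
        build.addChild("compile");
        build.addChild("package");
        root.addChild("deploy");

        List<String> out = new ArrayList<>();
        root.walk(out);
        return out;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [release, build, compile, package, deploy]
    }
}

/** Pared-down stand-in for Node<T>; the name MiniNode is hypothetical. */
class MiniNode<T> {
    final T data;
    final List<MiniNode<T>> children = new ArrayList<>();

    MiniNode(T data) {
        this.data = data;
    }

    /** Adds a child holding childData and returns the new child node. */
    MiniNode<T> addChild(T childData) {
        MiniNode<T> child = new MiniNode<>(childData);
        children.add(child);
        return child;
    }

    /** Pre-order walk: visit this node first, then each subtree left to right. */
    void walk(List<T> out) {
        out.add(data);
        for (MiniNode<T> child : children) {
            child.walk(out);
        }
    }
}
```

Because a parent is always emitted before its children, the flattened list can be replayed in order to rebuild or persist the hierarchy.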

Reading and Writing Trees from Database

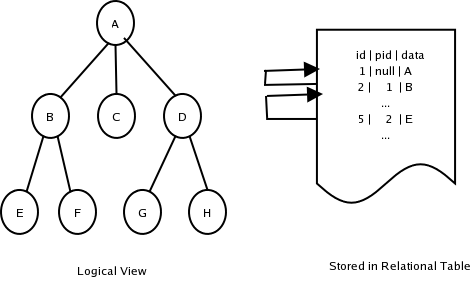

The Tree and Node classes are not very useful by themselves, since what I am after is the ability to store and retrieve my Tasks from a database. I will use Hibernate for my ORM layer. Since Hibernate, to the best of my knowledge, does not work with generic Java code, we create fully specified subclasses of our generic classes above, along with the Task POJO itself. The Task database table follows the standard pattern for representing hierarchical data in relational tables, with an embedded parent id that points back to the row's logical parent. The diagram above illustrates this pattern.

And here is the code for the subclasses of the generic Tree and Node classes, the POJO representing the Task object, the Hibernate XML mapping, the abstract base Hibernate DAO class, and the DAO classes for TaskTree and Task.

/**

* Non-generic subclass of Tree<Task>

*/

public class TaskTree extends Tree<Task> {

public TaskTree() {

super();

}

}

/**

* Non-generic subclass of Node<Task>

*/

public class TaskNode extends Node<Task> {

public TaskNode() {

super();

}

}

/**

* Simple POJO with one to one mapping to database table Task.

*/

public class Task implements Serializable {

private Long id;

private Long parentId;

private String taskName;

private String taskDescription;

private String allocatedTo;

private Integer estimatedHours;

private Integer actualHours;

private String status;

public Task() {

super();

}

public Task(String taskName) {

this();

setTaskName(taskName);

}

public Integer getActualHours() {

return this.actualHours;

}

public void setActualHours(Integer actualHours) {

this.actualHours = actualHours;

}

public String getAllocatedTo() {

return this.allocatedTo;

}

public void setAllocatedTo(String allocatedTo) {

this.allocatedTo = allocatedTo;

}

public Integer getEstimatedHours() {

return this.estimatedHours;

}

public void setEstimatedHours(Integer estimatedHours) {

this.estimatedHours = estimatedHours;

}

public Long getId() {

return this.id;

}

public void setId(Long id) {

this.id = id;

}

public Long getParentId() {

return this.parentId;

}

public void setParentId(Long parentId) {

this.parentId = parentId;

}

public String getStatus() {

return this.status;

}

public void setStatus(String status) {

this.status = status;

}

public String getTaskDescription() {

return this.taskDescription;

}

public void setTaskDescription(String taskDescription) {

this.taskDescription = taskDescription;

}

public String getTaskName() {

return this.taskName;

}

public void setTaskName(String taskName) {

this.taskName = taskName;

}

public String toString() {

return ReflectionToStringBuilder.reflectionToString(this, ToStringStyle.DEFAULT_STYLE);

}

}


The Hibernate mapping file for the Task object looks like this:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE hibernate-mapping PUBLIC "-//Hibernate/Hibernate Mapping DTD 3.0//EN" "http://hibernate.sourceforge.net/hibernate-mapping-3.0.dtd" >

<hibernate-mapping>

<class name="com.mycompany.beans.Task" table="Task" lazy="false">

<id name="id" type="java.lang.Long" unsaved-value="null">

<generator class="native" />

</id>

<property name="parentId" type="java.lang.Long">

<column name="parentId" />

</property>

<property name="taskName" type="java.lang.String">

<column name="taskName" />

</property>

<property name="taskDescription" type="java.lang.String">

<column name="taskDescription" />

</property>

<property name="allocatedTo" type="java.lang.String">

<column name="allocatedTo" />

</property>

<property name="estimatedHours" type="java.lang.Integer">

<column name="estimatedHours" />

</property>

<property name="actualHours" type="java.lang.Integer">

<column name="actualHours" />

</property>

<property name="status" type="java.lang.String">

<column name="status" />

</property>

</class>

</hibernate-mapping>


Finally, in keeping with our thinking that the Tree object should be a collection and concern itself only with navigation, and the Node object should reference the actual object, we create two separate DAOs (Data Access Objects). The client will normally reference the TaskTreeDao, and the TaskTreeDao will internally reference the TaskDao as needed. Here is the code for the TaskTreeDao and TaskDao classes. We have also refactored the common code into an abstract class AbstractHibernateDao (which extends Spring's HibernateDaoSupport).

/**

* A base DAO object that needs to be extended by all our individual DAOs. This

* class provides the base functionality to interact with the Hibernate ORM.

* @author Sujit Pal (sujit.pal@comcast.net)

* @version $Revision$

*/

public abstract class AbstractHibernateDao extends HibernateDaoSupport {

public AbstractHibernateDao() {

super();

}

public Serializable save(Object entity) {

return getHibernateTemplate().save(entity);

}

public void saveOrUpdate(Object entity) {

getHibernateTemplate().saveOrUpdate(entity);

}

public void update(Object entity) {

getHibernateTemplate().update(entity);

}

public void remove(Object entity) {

getHibernateTemplate().delete(entity);

}

public Object get(Class clazz, Serializable id) {

return getHibernateTemplate().get(clazz, id);

}

public Object load(Class clazz, Serializable id) {

return getHibernateTemplate().load(clazz, id);

}

public List find(final String hqlQuery, final String[] params, final Object[] paramValues, final Type[] paramTypes, final Integer start, final Integer count) {

return (List) getHibernateTemplate().execute(new HibernateCallback() {

public Object doInHibernate(Session session) throws HibernateException, SQLException {

Query query = session.createQuery(hqlQuery);

int paramsLength = (params == null ? 0 : params.length);

if (params != null) {

if (paramValues == null || paramValues.length != params.length) {

throw new IllegalArgumentException("ParamValues not completely specified for all params");

}

if (paramTypes == null || paramTypes.length != params.length) {

throw new IllegalArgumentException("ParamTypes not completely specified for all params");

}

}

for (int i = 0; i < paramsLength; i++) {

query.setParameter(params[i], paramValues[i], paramTypes[i]);

}

if (start == null) {

query.setFirstResult(0);

} else {

query.setFirstResult(start.intValue());

}

if (count != null) {

// only cap the result size when the caller asks for a limit

query.setMaxResults(count.intValue());

}

return query.list();

}

}, true);

}

}

/**

* Dao for the Task POJO.

*/

public class TaskDao extends AbstractHibernateDao {

private final static Logger log = Logger.getLogger(TaskDao.class);

public TaskDao() {

super();

}

/**

* Remove a Task object from the database.

* @param task the Task to remove.

*/

public void remove(Task task) {

super.remove(task);

}

/**

* Saves a Task to the database if it is a new one, else updates it.

* @param task the Task to insert or update.

* @return the task id.

*/

public Long saveOrUpdate(Task task) {

if (task.getId() == null) {

return (Long) save(task);

} else {

update(task);

return task.getId();

}

}

/**

* Returns the Task pointed to by the task id. Returns a NULL if the

* Task is not found in the database.

* @param taskId the task id to look up.

* @return the Task object corresponding to the id.

*/

public Task get(Long taskId) {

return (Task) super.get(Task.class, taskId);

}

/**

* Returns all the children of the Task which has the specified parent id.

* @param parentId the id of the parent Task.

* @return a List of Tasks which are children of the Task specified.

*/

@SuppressWarnings("unchecked")

public List<Task> findByParentId(Long parentId) {

return super.find("from Task where parentId = :parentId",

new String[] {"parentId"},

new Object[] {parentId},

new Type[] {Hibernate.LONG},

new Integer(0), new Integer(Integer.MAX_VALUE)); // no practical cap: return every child, not just the first

}

/**

* Returns the first task with the specified name. This will typically never

* be used directly from the application, mainly for use in tests.

* @param taskName the name of the task to return.

* @return the first task with the specified name.

*/

@SuppressWarnings("unchecked")

public Task findByName(String taskName) {

List<Task> tasks = super.find("from Task where taskName = :taskName",

new String[] {"taskName"},

new Object[] {taskName},

new Type[] {Hibernate.STRING},

new Integer(0), new Integer(1));

return tasks.isEmpty() ? null : tasks.get(0); // null when no task has that name

}

}

/**

* Data Access Object for the TaskTree object. This is not a true Dao, since it

* delegates to the TaskDao object for any database operations.

*/

public class TaskTreeDao {

private static final Logger log = Logger.getLogger(TaskTreeDao.class);

private TaskDao taskDao;

public TaskTreeDao() {

super();

}

public void setTaskDao(TaskDao taskDao) {

this.taskDao = taskDao;

}

/**

* Saves a TaskTree object.

* @param taskTree a TaskTree object.

*/

public void saveOrUpdate(TaskTree taskTree) {

List<Node<Task>> tasks = taskTree.toList();

// save the tree in reverse order, starting from the leaf nodes

// and going up to the root of the tree.

int numberOfNodes = tasks.size();

for (int i = numberOfNodes - 1; i >= 0; i--) {

Node<Task> taskElement = tasks.get(i);

Task task = taskElement.getData();

taskDao.saveOrUpdate(task);

Long parentId = task.getId();

for (Iterator<Node<Task>> it = taskElement.getChildren().iterator(); it.hasNext();) {

Node<Task> childElement = it.next();

Task childTask = childElement.getData();

childTask.setParentId(parentId);

taskDao.saveOrUpdate(childTask);

}

}

}

/**

* Gets a single TaskTree by id. This is the head of a recursive method call

* to getRecursive().

* @param id the id of the TaskTree.

* @return a TaskTree for that id.

*/

public TaskTree get(Long id) {

TaskTree taskTree = new TaskTree();

Node<Task> rootElement = new Node<Task>(taskDao.get(id));

getRecursive(rootElement, taskTree);

taskTree.setRootElement(rootElement);

return taskTree;

}

/**

* Recursively descends the Tree and populates the TaskTree object.

* @param taskElement the root Node.

* @param tree the TaskTree object to populate.

*/

private void getRecursive(Node<Task> taskElement, TaskTree tree) {

List<Task> children = taskDao.findByParentId(taskElement.getData().getId());

List<Node<Task>> childElements = new ArrayList<Node<Task>>();

for (Iterator<Task> it = children.iterator(); it.hasNext();) {

Task childTask = it.next();

Node<Task> childElement = new Node<Task>(childTask);

childElements.add(childElement);

getRecursive(childElement, tree);

}

taskElement.setChildren(childElements);

}

}

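The `getRecursive()` method above issues one query per node. When all the rows of a tree can be loaded at once, the same parent-id pattern can also be assembled in memory in a single pass. The sketch below is only an illustration of that idea under stated assumptions, not part of the DAO code: `Row`, `TreeNode`, and `buildTree` are hypothetical names, and plain objects stand in for Task rows loaded through Hibernate.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class AdjacencyListDemo {
    /** One table row: its own id, its parent's id (null for the root), and a name. */
    static class Row {
        final Long id;
        final Long parentId;
        final String name;

        Row(Long id, Long parentId, String name) {
            this.id = id;
            this.parentId = parentId;
            this.name = name;
        }
    }

    /** In-memory tree node wrapping a row. */
    static class TreeNode {
        final Row row;
        final List<TreeNode> children = new ArrayList<>();

        TreeNode(Row row) {
            this.row = row;
        }
    }

    /** Links every row to its parent and returns the node whose parentId is null. */
    static TreeNode buildTree(List<Row> rows) {
        // First pass: index every row by id.
        Map<Long, TreeNode> byId = new HashMap<>();
        for (Row row : rows) {
            byId.put(row.id, new TreeNode(row));
        }
        // Second pass: attach each node to its parent.
        TreeNode root = null;
        for (Row row : rows) {
            TreeNode node = byId.get(row.id);
            if (row.parentId == null) {
                root = node; // assumes a single root row
            } else {
                TreeNode parent = byId.get(row.parentId);
                if (parent != null) { // rows whose parent is missing are skipped
                    parent.children.add(node);
                }
            }
        }
        return root;
    }

    public static void main(String[] args) {
        List<Row> rows = new ArrayList<>();
        rows.add(new Row(1L, null, "release"));
        rows.add(new Row(2L, 1L, "build"));
        rows.add(new Row(3L, 1L, "deploy"));
        rows.add(new Row(4L, 2L, "compile"));

        TreeNode root = buildTree(rows);
        System.out.println(root.row.name + " has " + root.children.size() + " children");
    }
}
```

The trade-off is memory for round trips: this approach needs every row of the tree in hand up front, whereas the recursive DAO fetches only the subtree under the requested id.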

Usage

Using the above classes, if we have built up a TaskTree object, we can save it using the following call:

TaskTree taskTree = buildUpOurTaskTreeSomehow();

TaskTreeDao taskTreeDao = getReferenceToATaskTreeDao();

taskTreeDao.saveOrUpdate(taskTree);


and retrieve it back from the database using this call:

TaskNode rootElement = getOurRootNodeSomehow();

TaskTreeDao taskTreeDao = getReferenceToATaskTreeDao();

TaskTree taskTree = taskTreeDao.get(rootElement.getData().getId());


So, although Java allows the programmer to be blissfully unaware of the complexity of building data structures by hand, sometimes it becomes necessary to do so, simply because the data structures provided by Java are not sufficient for the task at hand. There are a number of good books on the subject, as mentioned in my last blog post, and if you don't want to spend any money, check out The Particle's Guide to Java Data Structures. I've only just skimmed it, and it could do with improvement, but hey, it's free.